Turns out you can use good old BGP to power your Kubernetes network! In this post I’ll cover how to build your Kubernetes network with BGP and how to use it for traffic engineering in your Kubernetes clusters. My hope is that this post gives you enough context to use BGP in your own clusters (where it makes sense), especially if you are running Kubernetes on-premises.

Why BGP?

BGP (Border Gateway Protocol) is an industry standard for exchanging routing and reachability information between autonomous systems. BGP is an essential piece of the public internet: large networks across the globe are glued together with it. If you want to dig deeper into the specifics of BGP, I recommend checking out the IETF RFC on it (RFC 4271).

If you are running Kubernetes on the cloud (AWS, Azure, DigitalOcean, GCP, etc), then using BGP likely won’t make sense. Your cloud provider either provides an SDN for your cluster, or in some cases you may be stuck using an overlay network on top of the network that your VMs are running on. However, if you are running Kubernetes on-premise and you have the flexibility to configure your network, then BGP is one of the many options you can use to set up routes for your cluster. Using BGP opens up a lot of possibilities for your Kubernetes network, such as routable Pod and Service IPs, which you wouldn't be able to get in most cloud environments (yet).

How to Set Up Your Cluster with BGP

Configuring your Networking Devices

This is the shameful hand-wavy part of my post where I say “it depends”. And in reality, it really does. In some cases, there may be a lot of configuration required since there are so many knobs we can tune with BGP. On top of that, there are thousands of different network devices from various vendors, and the steps required to configure your network correctly differ between them. For this step, I highly recommend consulting a network engineer. The remainder of this post will assume that you know how to configure routes, import/export policies, etc on your network devices.

Configuring your Kubernetes Cluster

What’s nice about using BGP on Kubernetes is that building your own solution is doable. There are rich APIs in various languages for using both Kubernetes (client-go, client-python, etc) and BGP (gobgp, exabgp, etc). If you don’t want to build your own though (understandable), there are a few projects out there already. My favourite among them is kube-router due to its ease of use, but other solutions like MetalLB and Calico may be a better fit for your use case.

kube-router offers three main features: service proxy with IPVS, network policies, and network routing. For this post, we’ll assume we only want to deploy kube-router with network routing. To do so, deploy kube-router like so:

$ curl -LO https://raw.githubusercontent.com/cloudnativelabs/kube-router/v0.2.0-beta.7/daemonset/generic-kuberouter-only-advertise-routes.yaml

# open the file above and edit the following flags based on how your network is configured. Consult your network admin.

$ vim generic-kuberouter-only-advertise-routes.yaml

# edit this flag: --cluster-asn=65001

# edit this flag: --peer-router-ips=10.0.0.1,10.0.0.2

# edit this flag: --peer-router-asns=65000,65000

$ kubectl apply -f generic-kuberouter-only-advertise-routes.yaml
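Before applying the manifest, it can help to sanity-check the values you plan to put in those flags. The snippet below is a minimal sketch, not part of kube-router: `check_peer_flags` is a hypothetical helper, and it assumes each peer IP is paired with exactly one peer ASN (as the example flags above suggest) and that ASNs are non-zero 32-bit numbers.

```python
import ipaddress


def check_peer_flags(cluster_asn, peer_ips, peer_asns):
    """Return a list of problems found; an empty list means the values look sane.

    peer_ips and peer_asns are the comma-separated strings you would pass to
    --peer-router-ips and --peer-router-asns.
    """
    problems = []
    ips = [p.strip() for p in peer_ips.split(",") if p.strip()]
    asns = [a.strip() for a in peer_asns.split(",") if a.strip()]

    # Assumption: peers are given as parallel lists, one ASN per IP.
    if len(ips) != len(asns):
        problems.append(f"{len(ips)} peer IPs but {len(asns)} peer ASNs")

    for ip in ips:
        try:
            ipaddress.ip_address(ip)
        except ValueError:
            problems.append(f"invalid peer IP: {ip}")

    # ASNs must be non-zero and fit in 32 bits.
    for asn in [str(cluster_asn), *asns]:
        if not asn.isdigit() or not 1 <= int(asn) <= 4294967295:
            problems.append(f"invalid ASN: {asn}")

    return problems


if __name__ == "__main__":
    for problem in check_peer_flags(65001, "10.0.0.1,10.0.0.2", "65000,65000"):
        print(problem)
```

A check like this catches the easy mistakes (a dropped octet, a missing ASN) before they turn into a BGP session that never comes up.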

It’s important to note that kube-router assumes that no CNI plugin is installed in your cluster so it will try to install the bridge CNI plugin on all your nodes. You can turn this behaviour off by setting the flag --enable-cni=false.

For more details on how to install kube-router check out the user guide.

What it should look like

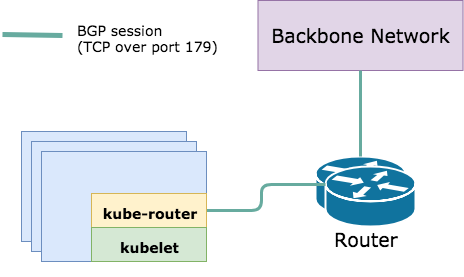

At this point you have added the kube-router DaemonSet to your cluster, which means a BGP daemon is running on every Kubernetes node. Your Kubernetes cluster is now BGP peering with the rest of your networking infrastructure, assuming the immediate peers have links out to the rest of the network, as in the diagram above.

With BGP peering configured, your cluster can begin advertising pod and service IPs. A benefit of advertising pod IPs is that pods become like any other routable host in your data center. Since each Kubernetes node is advertising its own pod CIDR, we can rely on the underlying network to route packets for our pods regardless of where the request originates from. This removes the need for packet encapsulation or custom rules on a Linux routing table.
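To make the "let the network route it" idea concrete, here is a rough sketch of what an upstream router effectively does once every node has advertised its pod CIDR: a longest-prefix match from destination IP to next hop. The prefixes and node IPs in `ROUTES` are made up for illustration, and real routers do this lookup in hardware rather than in a Python loop.

```python
import ipaddress

# Hypothetical routes learned over BGP: each node advertises its own
# pod CIDR with itself as the next hop.
ROUTES = {
    "172.30.100.0/24": "10.0.0.1",
    "172.30.101.0/24": "10.0.0.2",
    "172.30.102.0/24": "10.0.0.3",
}


def next_hop(dest_ip, routes=ROUTES):
    """Return the next hop for dest_ip using longest-prefix match, or None."""
    dest = ipaddress.ip_address(dest_ip)
    best = None  # (network, next hop) of the most specific match so far
    for prefix, hop in routes.items():
        net = ipaddress.ip_network(prefix)
        if dest in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    return best[1] if best else None


if __name__ == "__main__":
    # A pod in 172.30.101.0/24 is reached via the node that advertised it.
    print(next_hop("172.30.101.17"))
```

No tunnel and no per-node static route is involved: the only state is the set of prefixes each node announced.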

If we want to dig deeper into what the BGP session looks like, we can exec into any kube-router pod and see the following output as part of the kube-router welcome message (generated by gobgp):

Here's a quick look at what's happening on this Node

--- BGP Server Configuration ---

AS: 4206927100

Router-ID: 10.0.0.1

Listening Port: 179, Addresses: 10.0.0.1, ::

--- BGP Neighbors ---

Peer AS Up/Down State

10.1.1.1 4206210402 6d 01:23:15 Establ

10.1.1.2 4206210402 6d 01:23:12 Establ

--- BGP Route Info ---

Network Next Hop AS_PATH Age

*> 172.25.10.1/32 10.0.0.1 00:03:27

*> 172.25.10.2/32 10.0.0.1 00:03:27

*> 172.25.10.3/32 10.0.0.1 00:03:27

*> 172.25.10.16/32 10.0.0.1 00:03:27

*> 172.25.10.24/32 10.0.0.1 00:03:27

*> 172.25.10.25/32 10.0.0.1 00:03:27

*> 172.25.10.27/32 10.0.0.1 00:03:27

*> 172.25.10.34/32 10.0.0.1 00:03:27

...

...

*> 172.30.100.0/24 10.0.0.1 00:03:27

Let’s break this output down:

BGP Server Configuration: this specifies the BGP ASN (autonomous system number), which corresponds to the --cluster-asn flag we set earlier, and the IP of this node. This is the information kube-router uses to identify itself to its BGP peers. Your Kubernetes cluster is a BGP autonomous system, identified in this case by the ASN 4206927100.

BGP Neighbors: this lists all the BGP neighbors (peers) that have established a BGP session with this node. This list should include all the peers you specified with the --peer-router-ips and --peer-router-asns flags earlier.

BGP Route Info: this lists all the routes that have been advertised from this node. You’ll notice each /32 address represents a Kubernetes Service or External IP. The one /24 subnet that is advertised is the pod CIDR assigned to this node by the Kubernetes control plane.
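If you want to check this breakdown on a busy node, a few lines of scripting will do. This is only a sketch: it assumes route lines look like the sample output above (`*>`, prefix, next hop, age), and it uses the rule of thumb from this post that host routes (/32) are Service or External IPs while anything wider is a pod CIDR. `classify_routes` is a hypothetical helper, not something kube-router or gobgp ships.

```python
import ipaddress

# A few route lines copied from the sample output above.
SAMPLE = """\
*> 172.25.10.1/32 10.0.0.1 00:03:27
*> 172.25.10.2/32 10.0.0.1 00:03:27
*> 172.30.100.0/24 10.0.0.1 00:03:27
"""


def classify_routes(text):
    """Split advertised prefixes into (service_ips, pod_cidrs)."""
    services, pod_cidrs = [], []
    for line in text.splitlines():
        fields = line.split()
        # Skip headers, blank lines, and anything that is not a best route.
        if len(fields) < 3 or fields[0] != "*>":
            continue
        net = ipaddress.ip_network(fields[1])
        if net.prefixlen == net.max_prefixlen:
            services.append(str(net))  # host route: Service / External IP
        else:
            pod_cidrs.append(str(net))  # wider prefix: pod CIDR
    return services, pod_cidrs


if __name__ == "__main__":
    services, pod_cidrs = classify_routes(SAMPLE)
    print("service IPs:", services)
    print("pod CIDRs:", pod_cidrs)
```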

Traffic Engineering with BGP

Now that you’ve stood up your Kubernetes cluster and each node has established a BGP session with the underlying network, we can start to do some interesting traffic engineering using BGP.

Anycast Routing for Kubernetes Services

Anycast means advertising the same IP address from multiple locations and letting the network deliver each packet to one of them. One of the most common ways to implement anycast is with ECMP routing. ECMP stands for “equal-cost multi-path”; it’s a routing strategy where packets are forwarded along any one of several equally good “best paths” in a network. How the best paths are determined depends on a lot of factors, but typically the best path is the shortest one (the path with the fewest hops). The most common example of anycast is global DNS, where we want one IP (think 1.1.1.1 or 8.8.8.8) to be routed to the closest site running a globally distributed DNS service. BGP is one of many routing protocols that lets us implement ECMP routing.

If you’ve already configured your network to handle ECMP routing, then all that’s left to implement anycast is to advertise your anycast IP over multiple BGP peers. With Kubernetes, we can advertise service IPs to accomplish anycast routing for any of our services.

What’s nice about using Kubernetes with anycast is that there is a lot of flexibility when it comes to where packets for Services can be routed. As long as a packet arrives at a node that has IPVS / iptables rules set by kube-proxy (this should be every node), we can trust the system to do the correct network address translation to one of the many corresponding pods for your service. To avoid extra hops in your network, you can configure your service with externalTrafficPolicy=Local, and kube-router will only advertise a Service IP from a node if a healthy endpoint for that Service exists on that node. Likewise, kube-proxy will configure NAT to only the local pods.
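Here is a toy model of those two pieces working together: which nodes advertise a Service IP, and how a router might spread flows across them with ECMP. Treat it as a sketch under stated assumptions. The node names and endpoint counts are invented, and hashing the flow tuple with SHA-256 is just a stand-in for whatever hash your router vendor actually uses.

```python
import hashlib


def advertising_nodes(endpoints_by_node, local_only):
    """Nodes that would advertise the Service IP.

    endpoints_by_node maps node name -> number of healthy local endpoints.
    With local_only=True (modelling externalTrafficPolicy=Local) only nodes
    that host at least one healthy endpoint advertise the IP.
    """
    if local_only:
        return sorted(n for n, count in endpoints_by_node.items() if count > 0)
    return sorted(endpoints_by_node)


def ecmp_pick(flow, next_hops):
    """Pick one next hop by hashing the flow tuple.

    Hashing (instead of picking at random) keeps every packet of a flow on
    the same path, which is what ECMP implementations typically aim for.
    """
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return next_hops[int.from_bytes(digest[:8], "big") % len(next_hops)]


if __name__ == "__main__":
    endpoints = {"node-a": 2, "node-b": 0, "node-c": 1}  # hypothetical cluster
    hops = advertising_nodes(endpoints, local_only=True)
    # (src IP, src port, dst IP, dst port, protocol)
    flow = ("192.0.2.10", 40000, "172.25.10.1", 53, "udp")
    print(hops, "->", ecmp_pick(flow, hops))
```

In this model node-b never attracts traffic for the Service while it has no local endpoint, so no packet takes a second hop to reach a pod.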

AS Path Prepending to Modify Best Paths

kube-router supports AS (autonomous system) path prepending with BGP. Path prepending is a mechanism that lets us make a route look longer by repeating an ASN in its advertised AS path. If you prepend an ASN N times for one node in your cluster, your routers will see an AS path to that node that is N hops longer than the path to the rest of the cluster. This can be extremely useful if you want to steer traffic away from a subset of nodes, since ECMP will no longer consider those nodes a best path.

Prepending AS paths for any node can be accomplished by annotating that node with the following:

$ kubectl annotate node k8s-node01 kube-router.io/path-prepend.as=<your-cluster-asn>

$ kubectl annotate node k8s-node01 kube-router.io/path-prepend.repeat-n=2
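To see why this takes k8s-node01 out of rotation, here is a deliberately simplified model of what the upstream router does. It only compares AS path length; real BGP best-path selection looks at other attributes (local preference, for example) before AS path length. The cluster ASN 65001 and the node names are example values, and both functions are hypothetical helpers.

```python
def advertised_as_path(cluster_asn, prepend_as=None, repeat_n=0):
    """AS path a peer would see for a route from one node.

    The node's own ASN always appears once; prepending adds repeat_n
    extra copies of prepend_as in front of it.
    """
    prepended = [prepend_as] * repeat_n if prepend_as is not None else []
    return prepended + [cluster_asn]


def ecmp_set(paths_by_node):
    """Nodes whose AS path is tied for shortest, i.e. the ECMP candidates."""
    shortest = min(len(path) for path in paths_by_node.values())
    return sorted(n for n, path in paths_by_node.items() if len(path) == shortest)


if __name__ == "__main__":
    paths = {
        # Annotated as above: path-prepend.as=65001, path-prepend.repeat-n=2
        "k8s-node01": advertised_as_path(65001, prepend_as=65001, repeat_n=2),
        "k8s-node02": advertised_as_path(65001),
        "k8s-node03": advertised_as_path(65001),
    }
    print(ecmp_set(paths))
```

Removing the annotations puts k8s-node01 back into the set, which makes prepending a handy lever for draining a node at the network level.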

Multiple ASes per Cluster

With BGP, it's possible to have multiple ASes per Kubernetes cluster. Having multiple ASes per cluster lets you group nodes based on the routing policies defined for each AS. Use cases like this are not uncommon since Kubernetes is designed for multitenancy. You may have services that need to be routable over a public network, but that doesn’t mean you want your entire cluster exposed to that public network. Or maybe some network devices in your data center have different requirements, so you need special routing rules/policies for hardware connected to those devices. kube-router supports this by letting us override the ASN of any node using annotations. If you’re overriding the ASN, you’ll likely need to override the peer IP and ASN of your external peers (networking devices) as well.

$ kubectl annotate node k8s-node01 kube-router.io/node.asn=<overriding ASN>

$ kubectl annotate node k8s-node01 kube-router.io/peer.ips=<custom peer ips, comma delimited>

$ kubectl annotate node k8s-node01 kube-router.io/peer.asns=<custom peer asns, comma delimited>
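If you have more than a handful of nodes to regroup, you may prefer to generate these commands rather than type them. The sketch below only builds the command strings using the three annotation keys shown above; the ASN, peer IP, and node name in the usage example are placeholders, and both helpers are hypothetical.

```python
def node_annotations(asn, peer_ips, peer_asns):
    """Build the three override annotations for one node."""
    if len(peer_ips) != len(peer_asns):
        raise ValueError("each peer IP needs a matching peer ASN")
    return {
        "kube-router.io/node.asn": str(asn),
        "kube-router.io/peer.ips": ",".join(peer_ips),
        "kube-router.io/peer.asns": ",".join(str(a) for a in peer_asns),
    }


def annotate_commands(node, annotations):
    """Render one `kubectl annotate` command per annotation."""
    return [
        f"kubectl annotate node {node} {key}={value}"
        for key, value in annotations.items()
    ]


if __name__ == "__main__":
    # Placeholder values: put k8s-node01 in its own AS with one custom peer.
    annotations = node_annotations(65010, ["10.0.1.1"], [65000])
    for command in annotate_commands("k8s-node01", annotations):
        print(command)
```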

Dynamic Routing with BGP

BGP is a dynamic routing protocol. As the name implies, dynamic routing means that routes can be added or removed based on the state of the cluster. A key benefit of this, as opposed to static routing, is automatic failover. When your nodes lose connectivity (for whatever reason), the BGP sessions between those nodes and their BGP peers go down too. Once a session closes, the peer withdraws the routes it learned over that session, so your network stops routing traffic to that node. This should significantly improve the resiliency of your cluster!
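The failover behaviour can be sketched with a tiny routing table that remembers which peer announced which prefix. This is a toy model of the upstream router, not of kube-router: the `Rib` class, the node names, and the anycast prefix are all invented for illustration, and a real router detects a dead session through hold timers or BFD rather than a method call.

```python
class Rib:
    """Toy routing table: prefix -> set of peers that announced it."""

    def __init__(self):
        self._routes = {}

    def announce(self, peer, prefix):
        self._routes.setdefault(prefix, set()).add(peer)

    def session_down(self, peer):
        """Drop every route learned from a peer whose session closed."""
        for prefix in list(self._routes):
            self._routes[prefix].discard(peer)
            if not self._routes[prefix]:
                del self._routes[prefix]

    def next_hops(self, prefix):
        return sorted(self._routes.get(prefix, ()))


if __name__ == "__main__":
    rib = Rib()
    for node in ("node-a", "node-b"):
        rib.announce(node, "172.25.10.1/32")  # both nodes advertise the Service IP
    rib.announce("node-a", "172.30.100.0/24")  # node-a's pod CIDR

    rib.session_down("node-a")  # node-a loses connectivity
    print(rib.next_hops("172.25.10.1/32"))
    print(rib.next_hops("172.30.100.0/24"))
```

After the session drops, the Service IP is still reachable through node-b, while node-a's pod CIDR disappears from the table entirely.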

Trade-Offs

Like any technology, there will be trade-offs, and it’s important you consider these trade-offs before getting your hands dirty. Here’s a pros/cons list to help you decide if using BGP fits your use case!

Pros:

BGP lets us configure ECMP load balancing in our clusters; this is like having a cloud load balancer in your own data center.

BGP is highly configurable, so you can do a lot of cool things like AS path prepending to have finer control of the traffic in your cluster.

BGP is a dynamic routing protocol, so traffic will automatically fail over when necessary.

BGP can simplify your pod network topology because there is a separation of concerns. Each node does not need to be aware of the pod network on other nodes; it only needs to advertise the routes it can serve itself. In some cases, this can lead to performance improvements since it removes the need for overlays or large routing tables (one rule per node).

Cons:

In a data center environment, your network infrastructure needs to be aware that your Kubernetes cluster is a BGP autonomous system. This may require extra knowledge of how to set up BGP on your network devices, or getting a network engineer involved.

BGP is highly configurable and therefore has a really steep learning curve.

Managing BGP configuration on your network devices and on your Kubernetes cluster (especially if you have multiple ASes) may be operationally expensive and outweigh the benefits.

Thanks for taking the time to read this post! If you have any questions or just want to chat, you can reach me via @a_sykim on Twitter.