Based on recent discussions, I’ve noticed some confusion around external traffic policies for Kubernetes Services. This is not surprising, given that a lot of the context around this feature can only be found by digging through many GitHub issues and pull requests. In this post I'll try to do a deep dive into this feature to clarify some of the important assumptions that may not be clear from the API or the documentation.

Overview

There’s a field you can configure in Kubernetes Services called externalTrafficPolicy. Here’s what you can find about it in the docs:

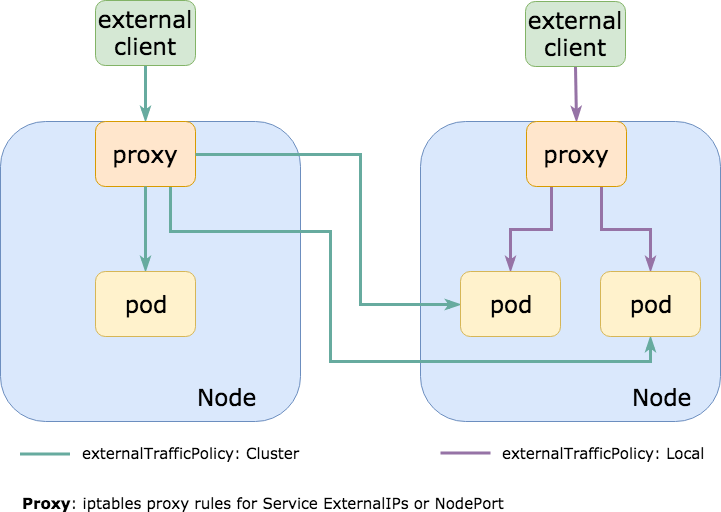

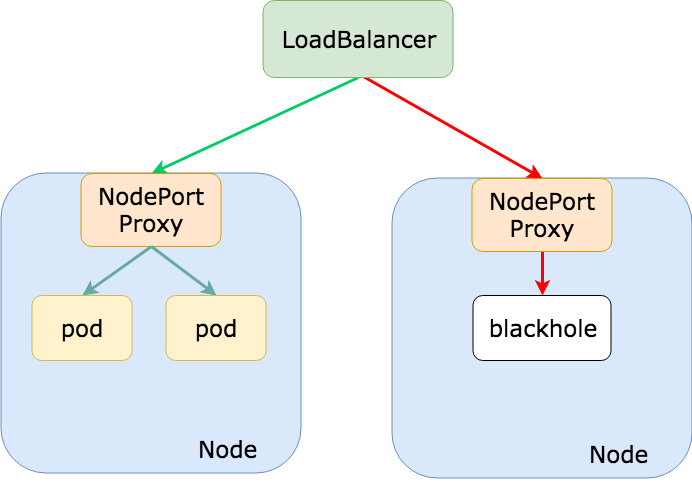

externalTrafficPolicy denotes if this Service desires to route external traffic to node-local or cluster-wide endpoints. "Local" preserves the client source IP and avoids a second hop for LoadBalancer and NodePort type services, but risks potentially imbalanced traffic spreading. "Cluster" obscures the client source IP and may cause a second hop to another node, but should have good overall load-spreading.

Here’s a diagram to illustrate this a bit better:

There are pros and cons of using either external traffic policy. I hope I can outline them well below!
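To make the field concrete before comparing the two policies, here is a minimal sketch of a NodePort Service manifest that sets it explicitly. The Service name, label, and port numbers are assumptions chosen for illustration; only the placement of externalTrafficPolicy under spec is the point.

```yaml
# Hypothetical NodePort Service; the name, label, and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: NodePort
  # "Cluster" is the default; change to "Local" to keep external traffic node-local.
  externalTrafficPolicy: Cluster
  selector:
    k8s-app: my-app
  ports:
  - port: 80          # port exposed on the Service's cluster IP
    targetPort: 8080  # port the pods listen on (assumed)
    nodePort: 30080   # must fall inside the cluster's NodePort range
```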

externalTrafficPolicy: Cluster

This is the default external traffic policy for Kubernetes Services. The assumption here is that you always want to route traffic to all pods running a service with equal distribution.

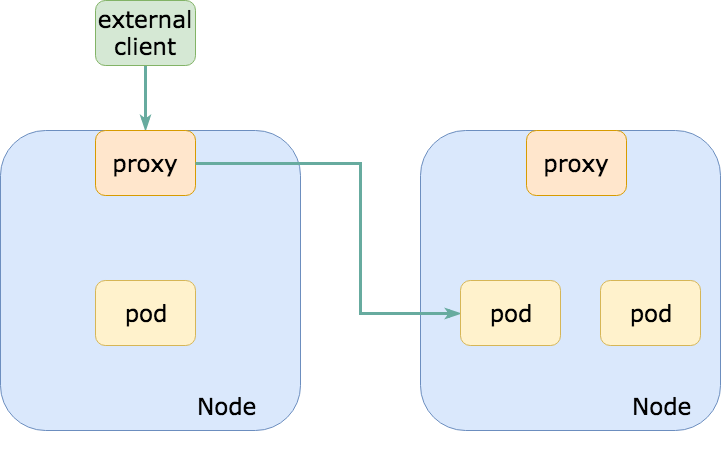

One of the caveats of using this policy is that you may see unnecessary network hops between nodes as you ingress external traffic. For example, if you receive external traffic via a NodePort, the NodePort proxy may (randomly) route traffic to a pod on another host when it could have routed traffic to a pod on the same host, avoiding that extra hop out to the network.

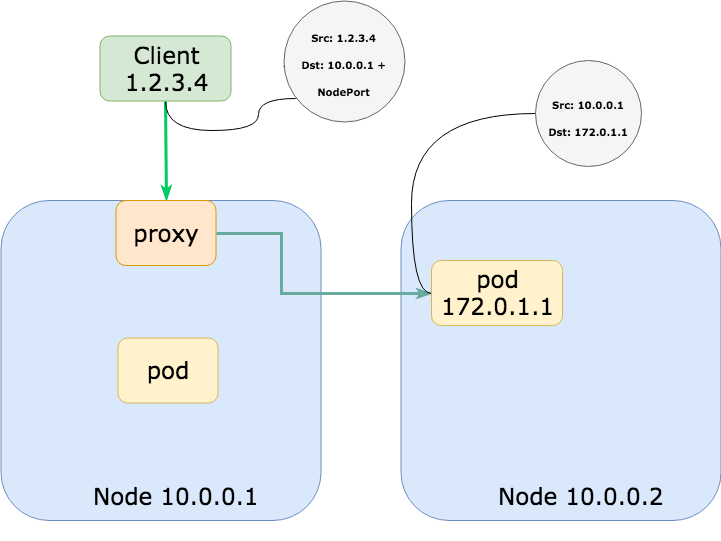

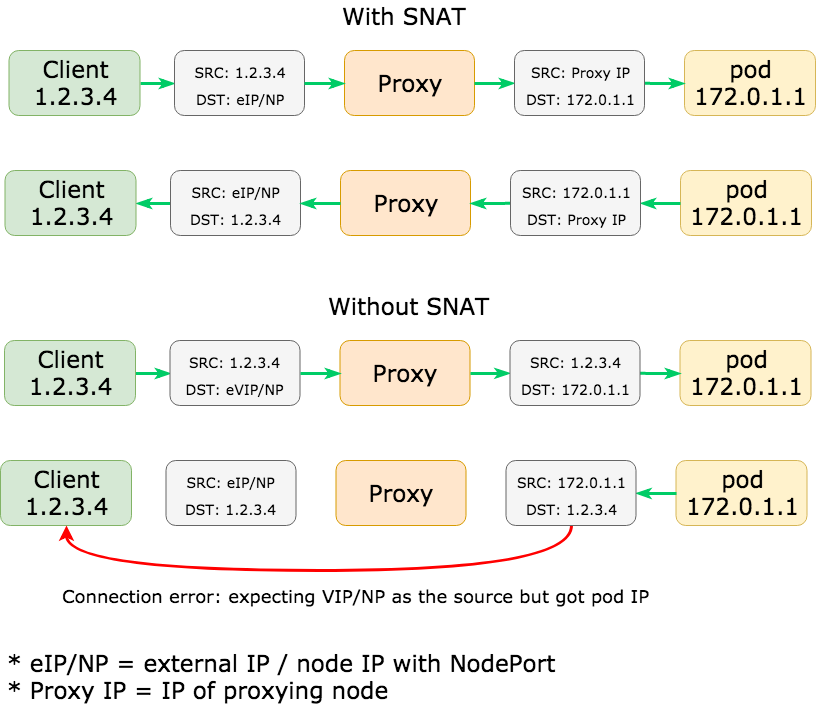
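As a rough back-of-the-envelope estimate of how often that extra hop happens, assume the proxy picks uniformly at random among all ready pods of the Service (a simplifying assumption; the actual selection depends on the proxy mode). The symbols and the numbers below are illustrative, not measurements.

```latex
% P        : total number of ready pods behind the Service
% p_local  : number of those pods on the node that received the packet
\[
  \Pr[\text{extra hop}] = 1 - \frac{p_{\text{local}}}{P}
\]

% Hypothetical example: P = 10 pods spread evenly over 5 nodes, so p_local = 2.
\[
  \Pr[\text{extra hop}] = 1 - \frac{2}{10} = 0.8
\]
```

Under these assumptions, the more nodes the pods are spread across, the more likely it is that a given connection leaves the node it arrived on.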

Likely a bigger problem than extra hops on the network is masquerading. As packets re-route to pods on another node, your traffic will be SNAT’d (source network address translation) so that the destination pod would actually see the proxying node’s IP instead of the true client IP. This is undesirable for many reasons which I won’t be covering in this post.

Although SNATing service traffic is undesirable, it is fundamental for the Kubernetes networking model to work. If we omitted SNAT, there would be a mismatch of source and destination addresses which would eventually lead to a connection error. The mismatch occurs because the client sends its packets to the node address on a NodePort (or to an external IP), but the reply would come back from the pod IP: the DNAT was performed on the proxying node, so a pod on another node replying directly to the client would bypass the node that knows how to reverse it. The client would see a reply from an address it never contacted and discard it.

externalTrafficPolicy: Local

With this external traffic policy, kube-proxy adds proxy rules for the Service's NodePort (allocated from the 30000-32767 range by default) only for pods that exist on the same node (local), as opposed to every pod for the Service regardless of where it was placed.

You’ll notice that if you try to set externalTrafficPolicy: Local on your Service, the Kubernetes API will require that you use the LoadBalancer or NodePort type. This is because the “Local” external traffic policy is only relevant for external traffic, which only applies to those two types.

    $ kubectl apply -f mysvc.yml
    The Service "mysvc" is invalid: spec.externalTrafficPolicy: Invalid value: "Local": ExternalTrafficPolicy can only be set on NodePort and LoadBalancer service

With this architecture, it’s important that any ingress traffic lands on nodes that are running the corresponding pods for that service; otherwise, the traffic is dropped. For packets arriving on a node running your application pods, we know that all of that traffic will route to the local pods, avoiding extra hops to other pods in the cluster.

We can achieve this logic by using a load balancer, which is why this external traffic policy is allowed with Services of type LoadBalancer (which uses the NodePort feature and adds backends to a load balancer with that node port). With a load balancer we would add every Kubernetes node as a backend, but we can depend on the load balancer’s health checking capabilities to only send traffic to backends where the corresponding NodePort is responsive (i.e. only nodes whose NodePort proxy rules point to healthy pods).

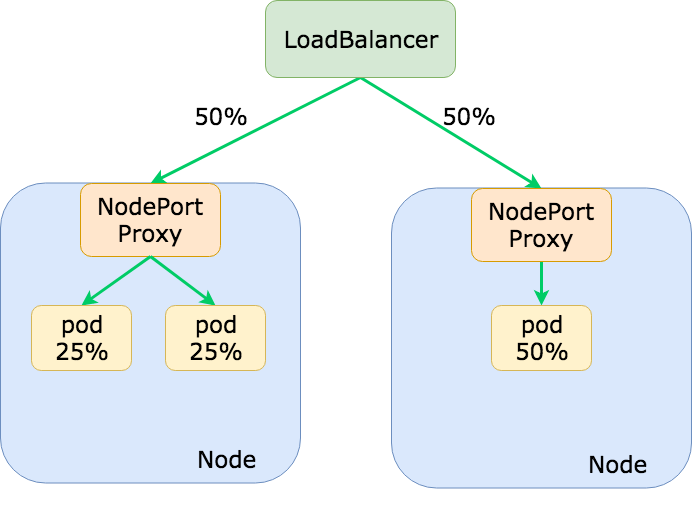
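The setup described above could be declared roughly as follows. This is a sketch, not a recommended configuration: the Service name, label, and ports are assumptions, and how the load balancer health-checks the nodes depends on your cloud provider's integration.

```yaml
# Hypothetical LoadBalancer Service using the "Local" policy; names and ports are illustrative.
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: LoadBalancer
  # Only route external traffic to pods on the node that received it.
  externalTrafficPolicy: Local
  selector:
    k8s-app: my-app
  ports:
  - port: 80          # port exposed by the load balancer
    targetPort: 8080  # port the pods listen on (assumed)
```

For this combination of type and policy, the Service spec also carries a healthCheckNodePort field, which a provider's load balancer integration can probe to tell which nodes currently have local pods; whether and how it is used is up to the provider.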

This model is great for applications that ingress a lot of external traffic and want to avoid unnecessary hops on the network to reduce latency. We can also preserve true client IPs since we no longer need to SNAT traffic from a proxying node! However, the biggest downside to using the “Local” external traffic policy, as mentioned in the Kubernetes docs, is that traffic to your application may be imbalanced. This is better explained in the diagram below:

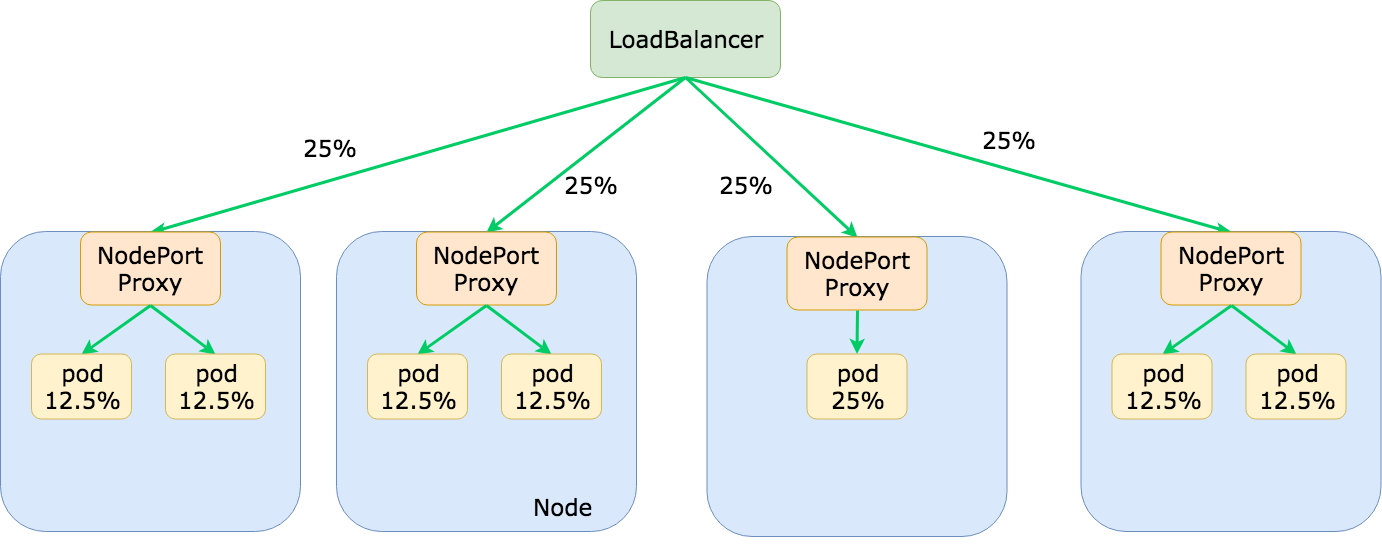

Because load balancers are typically not aware of the pod placement in your Kubernetes cluster, they assume that each backend (a Kubernetes node) should receive an equal share of traffic. As shown in the diagram above, this can lead to some pods for an application receiving significantly more traffic than others. In the future, we may see the development of load balancers that can hook into the Kubernetes API and better distribute traffic based on pod placement, but I have not seen anything like that (yet). To avoid uneven distribution of traffic we can use pod anti-affinity (against the node's hostname label) so that pods are spread out across as many nodes as possible:

    affinity:
      podAntiAffinity:
        preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100
          podAffinityTerm:
            labelSelector:
              matchExpressions:
              - key: k8s-app
                operator: In
                values:
                - my-app
            topologyKey: kubernetes.io/hostname

As your application scales and is spread across more nodes, imbalanced traffic should become less of a concern, as a smaller percentage of traffic will be unevenly distributed.
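The imbalance, and the way it shrinks as pods spread out, can be put into rough numbers. The sketch below assumes the load balancer splits traffic equally across the nodes that have local pods, and that each node splits its share equally among its local pods. The pod counts are hypothetical.

```latex
% N    : number of nodes receiving traffic (nodes with at least one local pod)
% p_i  : number of the application's pods on node i
% s_i  : share of total traffic received by each pod on node i
\[
  s_i = \frac{1}{N \, p_i}
\]

% Example 1: N = 2, node A has 1 pod, node B has 3 pods (4 pods total).
\[
  s_A = \frac{1}{2 \cdot 1} = 50\%, \qquad
  s_B = \frac{1}{2 \cdot 3} \approx 16.7\%, \qquad
  \text{even split} = \frac{1}{4} = 25\%
\]

% Example 2: N = 10, nine nodes have 1 pod each, one node has 2 pods (11 pods total).
\[
  s_{\text{single}} = \frac{1}{10 \cdot 1} = 10\%, \qquad
  s_{\text{double}} = \frac{1}{10 \cdot 2} = 5\%, \qquad
  \text{even split} = \frac{1}{11} \approx 9.1\%
\]
```

In the first example one pod carries three times the load of the others. In the second, most pods sit close to the even split and only the two pods sharing a node fall noticeably below it.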

Summary

In my experience, if you have a service receiving external traffic from an LB (using NodePorts), you almost always want to use externalTrafficPolicy: Local (with pod anti-affinity to alleviate imbalanced traffic). There are a few cases where externalTrafficPolicy: Cluster makes sense, but at the cost of losing client IPs and adding extra hops on your network.

An interesting problem related to this is internal traffic policies. As of today, in-cluster traffic (using a Service's clusterIP) is always SNAT'd and often incurs that extra hop on your network. There is no equivalent of the "Local" external traffic policy for internal traffic (and perhaps for good reason), which I think is an interesting but difficult problem to solve. If you have thoughts/opinions on this, I would love to chat (@a_sykim on Twitter)! Thanks for reading!